Upstage AI Lab, Cohort 2

March 6, 2024 (Wed) Day_058

Difficulty: Medium

# 1. Build an MNIST classifier with 99% or higher accuracy by constructing a Convolutional Neural Network yourself

(1) Build an MNIST classifier using only Convolution, Flatten, Fully Connected layer, and Activation operations

(2) Build an MNIST classifier by adding Max Pooling and Average Pooling layers to (1)

(3) Build an MNIST classifier by removing the pooling layers from (2) and making appropriate use of Adaptive Pooling

(1) Build an MNIST classifier using only Convolution, Flatten, Fully Connected layer, and Activation operations

```python
import torch.nn as nn


class CNN(nn.Module):
    def __init__(self, num_classes):
        super(CNN, self).__init__()
        self.num_classes = num_classes

        # convolution -> flatten -> fully connected classifier
        self.layer = nn.Sequential(
            # input shape (batch, 1, 28, 28)
            nn.Conv2d(in_channels=1, out_channels=16, kernel_size=5),
            # output shape (batch, 16, 24, 24)
            nn.ReLU(),
            nn.Conv2d(in_channels=16, out_channels=32, kernel_size=5),
            # output shape (batch, 32, 20, 20)
            nn.ReLU(),
            nn.Flatten(),
            nn.Linear(32*20*20, self.num_classes),
            nn.LogSoftmax(dim=1),  # log-probabilities over the classes
        )

    def forward(self, x):
        pred = self.layer(x)
        return pred

    def weight_initialization(self):
        # He (Kaiming) normal init for the weights, zeros for the biases
        for m in self.modules():
            if isinstance(m, (nn.Conv2d, nn.Linear)):
                nn.init.kaiming_normal_(m.weight)
                nn.init.zeros_(m.bias)
```
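The shape comments in the model follow from the output-size formula of `nn.Conv2d`. With the defaults used here (stride 1, padding 0, dilation 1), each 5×5 convolution shrinks height and width by 4, which is where the `32*20*20` input size of `nn.Linear` comes from. A minimal pure-Python sketch of that arithmetic; the helper name `conv2d_out` is hypothetical and not part of PyTorch:

```python
def conv2d_out(size: int, kernel: int, stride: int = 1, padding: int = 0, dilation: int = 1) -> int:
    """Output height/width of nn.Conv2d along one spatial dimension (hypothetical helper)."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


# Model (1): 28x28 input -> Conv(k=5) -> Conv(k=5)
h = 28
h = conv2d_out(h, kernel=5)  # 24
h = conv2d_out(h, kernel=5)  # 20
flat_features = 32 * h * h   # channels * height * width handed to nn.Linear

print(h, flat_features)  # 20 12800
```

If a kernel size or the input resolution changes, the `nn.Linear` size has to be recomputed in the same way.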

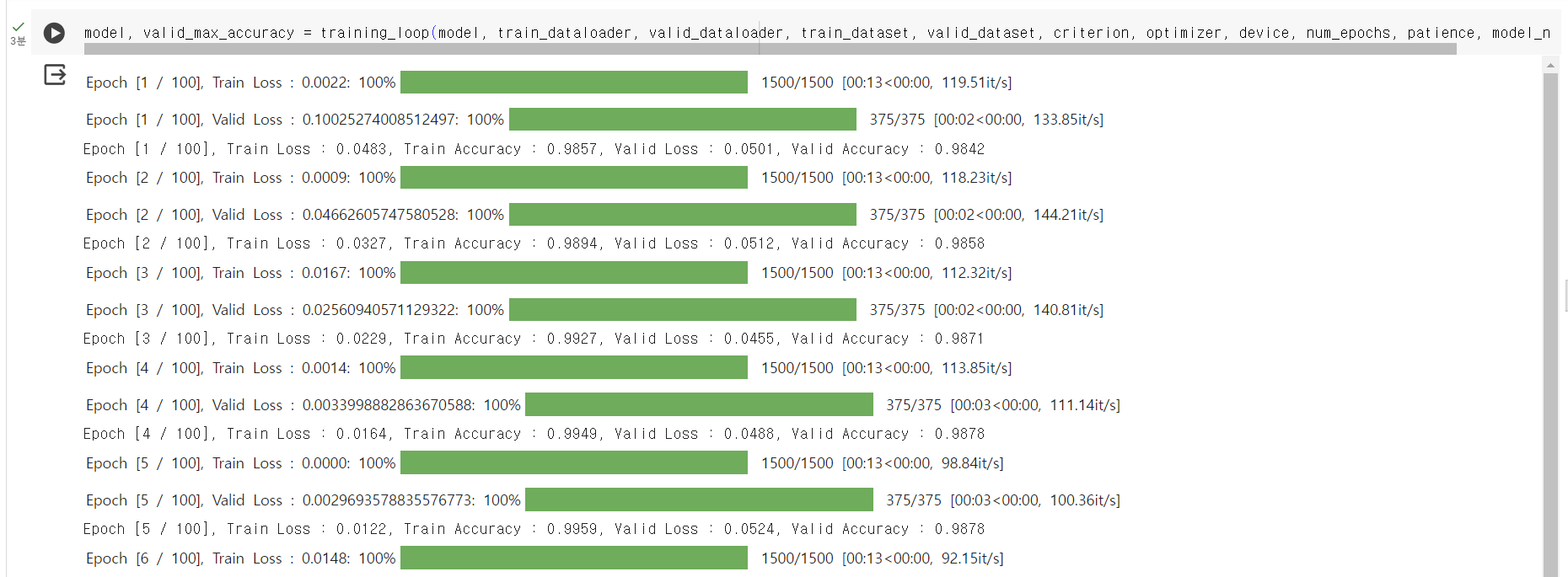

exp1

```python
nn.Conv2d(in_channels=1, out_channels=16, kernel_size=5),  # output shape (batch, 16, 24, 24)
nn.ReLU(),
nn.Conv2d(in_channels=16, out_channels=32, kernel_size=5),  # output shape (batch, 32, 20, 20)
nn.ReLU(),
nn.Flatten(),
nn.Linear(32*20*20, self.num_classes),
nn.LogSoftmax(dim=1),
```

Valid max accuracy : 0.9885833333333334

NG (below the 99% target)
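The post reports only the final number, not the training loop, so the following is an assumed sketch of how a "Valid max accuracy" like this is commonly obtained: take the argmax of the `LogSoftmax` outputs, compare it with the labels on the validation split, and keep the best value across epochs. The `evaluate` helper, the toy linear model, and the random stand-in data are all hypothetical; with real MNIST the model would be the `CNN` above, trained with `nn.NLLLoss`, the loss that expects log-probabilities from `nn.LogSoftmax`.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


@torch.no_grad()
def evaluate(model: nn.Module, loader: DataLoader) -> float:
    """Fraction of correctly classified samples (hypothetical helper)."""
    model.eval()
    correct, total = 0, 0
    for images, labels in loader:
        log_probs = model(images)        # (batch, num_classes), output of LogSoftmax
        preds = log_probs.argmax(dim=1)  # argmax of log-probabilities == argmax of probabilities
        correct += (preds == labels).sum().item()
        total += labels.size(0)
    return correct / total


# Toy stand-ins (assumptions): a tiny model and random "validation" data
model = nn.Sequential(nn.Flatten(), nn.Linear(28 * 28, 10), nn.LogSoftmax(dim=1))
valid_set = TensorDataset(torch.randn(64, 1, 28, 28), torch.randint(0, 10, (64,)))
valid_loader = DataLoader(valid_set, batch_size=16)

history = []
for epoch in range(3):
    # ... one epoch of training with nn.NLLLoss() would go here ...
    history.append(evaluate(model, valid_loader))

print("Valid max accuracy :", max(history))
```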

(2) Build an MNIST classifier by adding Max Pooling and Average Pooling layers to (1)
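Both pooling layers used in this part slide a 2×2 window over each channel. With the default stride (equal to `kernel_size`) the windows do not overlap, so height and width are halved, which is why the shape comments go from 24×24 to 12×12. A small illustration on a made-up 4×4 input (the values are arbitrary) of what each layer keeps:

```python
import torch
import torch.nn as nn

# One 1-channel 4x4 feature map, shape (batch=1, channels=1, 4, 4)
x = torch.tensor([[[[1., 2., 5., 6.],
                    [3., 4., 7., 8.],
                    [9., 10., 13., 14.],
                    [11., 12., 15., 16.]]]])

# kernel_size=2 with the default stride (= kernel_size): non-overlapping 2x2 windows, 4x4 -> 2x2
max_out = nn.MaxPool2d(kernel_size=2)(x)  # largest value of each window
avg_out = nn.AvgPool2d(kernel_size=2)(x)  # mean of each window

print(max_out.squeeze().tolist())  # [[4.0, 8.0], [12.0, 16.0]]
print(avg_out.squeeze().tolist())  # [[2.5, 6.5], [10.5, 14.5]]
```

Neither layer has learnable parameters; they differ only in how each window is summarized.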

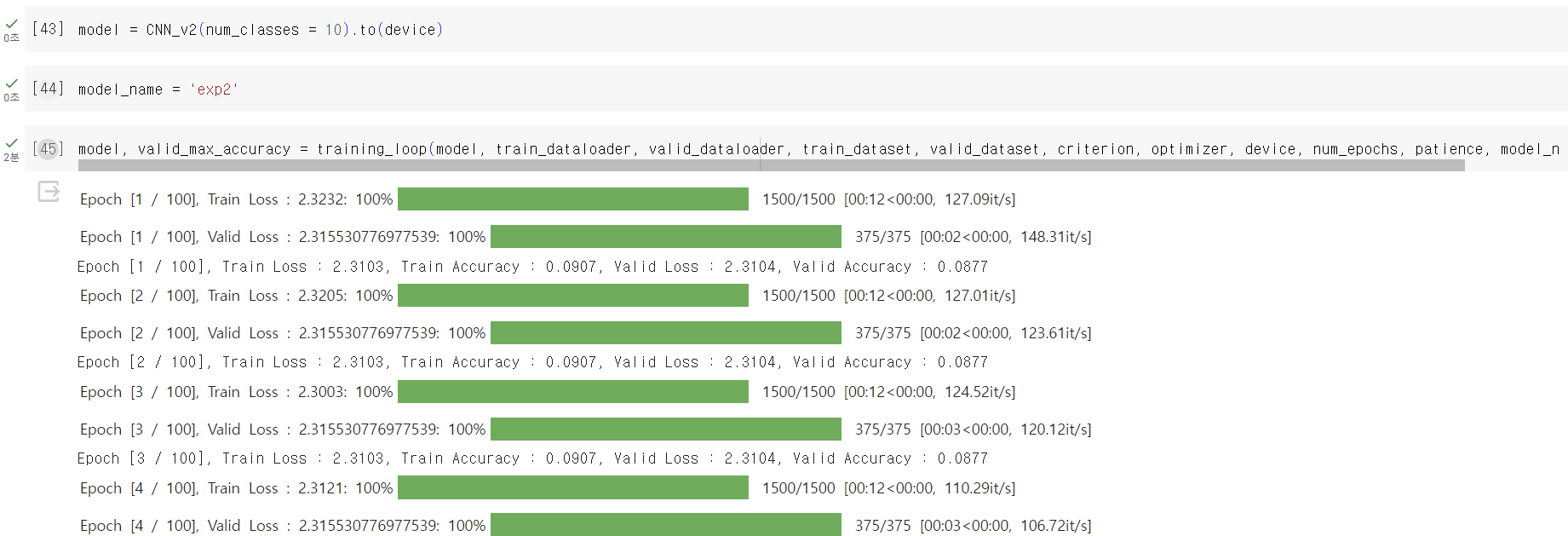

exp2

```python
nn.Conv2d(in_channels=1, out_channels=16, kernel_size=5),  # output shape (batch, 16, 24, 24)
nn.ReLU(),
nn.MaxPool2d(kernel_size=2),  # output shape (batch, 16, 12, 12)
nn.Conv2d(in_channels=16, out_channels=32, kernel_size=5),  # output shape (batch, 32, 8, 8)
nn.ReLU(),
nn.Flatten(),
nn.Linear(32*8*8, self.num_classes),
nn.LogSoftmax(dim=1),
```

Valid max accuracy : 0.08766666666666667

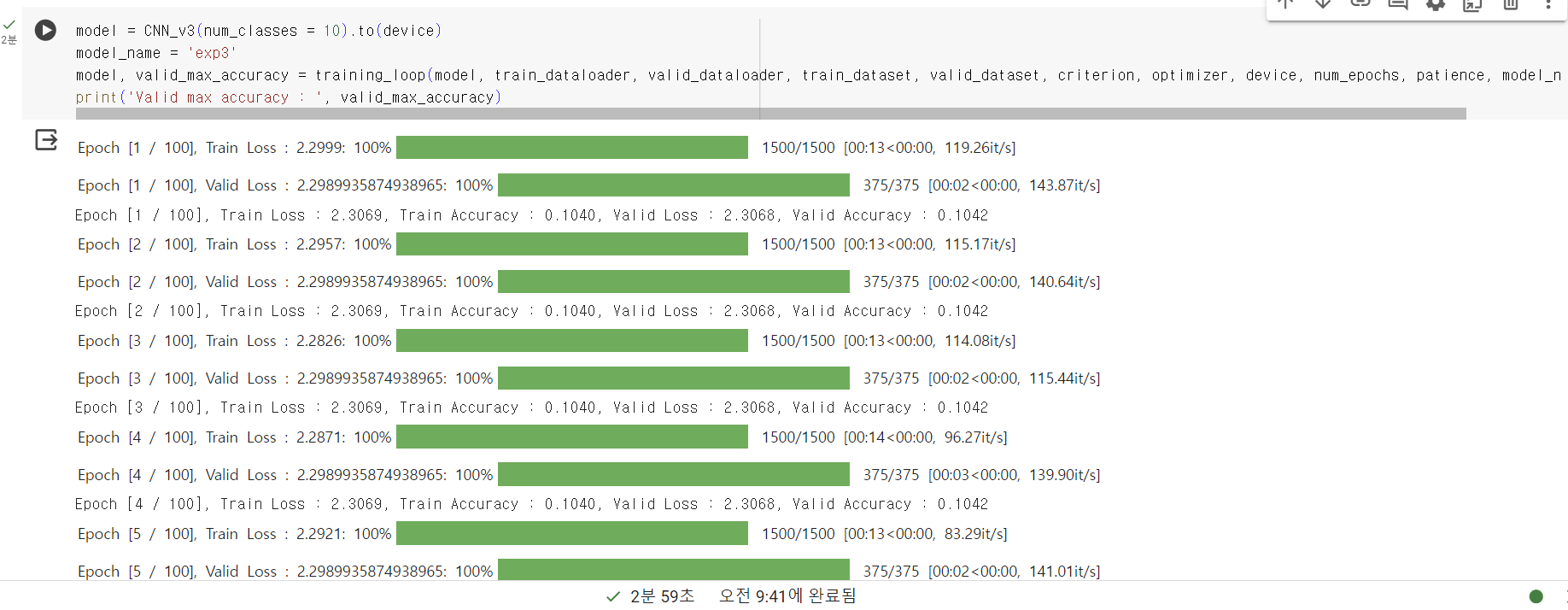

exp3

```python
nn.Conv2d(in_channels=1, out_channels=16, kernel_size=5),  # output shape (batch, 16, 24, 24)
nn.ReLU(),
nn.Conv2d(in_channels=16, out_channels=32, kernel_size=5),  # output shape (batch, 32, 20, 20)
nn.ReLU(),
nn.MaxPool2d(kernel_size=2),  # output shape (batch, 32, 10, 10)
nn.Flatten(),
nn.Linear(32*10*10, self.num_classes),
nn.LogSoftmax(dim=1),
```

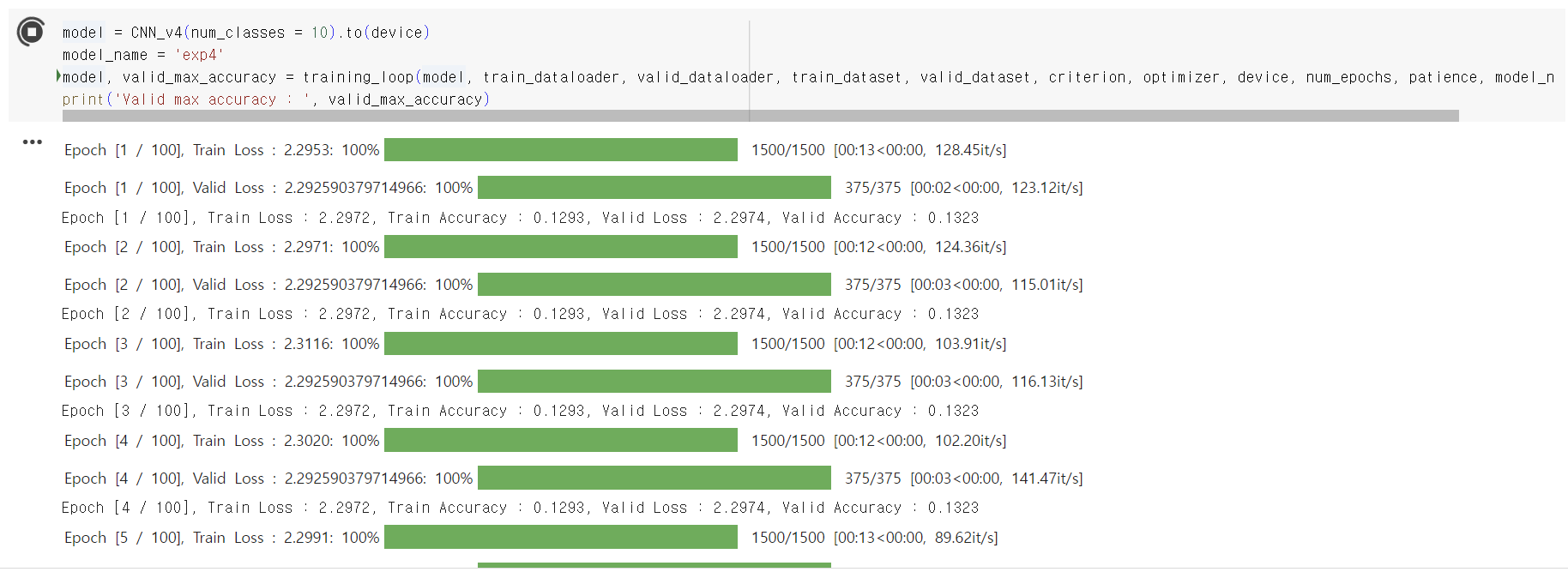

exp4

```python
nn.Conv2d(in_channels=1, out_channels=16, kernel_size=3),  # output shape (batch, 16, 26, 26)
nn.ReLU(),
nn.MaxPool2d(kernel_size=2),  # output shape (batch, 16, 13, 13)
nn.Conv2d(in_channels=16, out_channels=32, kernel_size=3),  # output shape (batch, 32, 11, 11)
nn.ReLU(),
nn.Flatten(),
nn.Linear(32*11*11, self.num_classes),
nn.LogSoftmax(dim=1),
```

Valid max accuracy : 0.08883333333333333
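Hand-computing `in_features` for `nn.Linear` gets error-prone once pooling layers are moved around, as in exp2 through exp4. One common alternative, sketched here as an assumption rather than what this post did, is to push a dummy tensor through the convolution/pooling part once and read off the size. The `flatten_size` helper is hypothetical; PyTorch's built-in `nn.LazyLinear`, which infers `in_features` on the first forward pass, is another option.

```python
import torch
import torch.nn as nn


def flatten_size(features: nn.Module, input_shape=(1, 1, 28, 28)) -> int:
    """Run one dummy input through the conv/pool stack and count the features
    that nn.Flatten would hand to nn.Linear (hypothetical helper)."""
    with torch.no_grad():
        out = features(torch.zeros(*input_shape))
    return out[0].numel()  # drop the batch dimension


# Convolution/pooling part of exp4 (everything before nn.Flatten)
exp4_features = nn.Sequential(
    nn.Conv2d(1, 16, kernel_size=3), nn.ReLU(),   # (batch, 16, 26, 26)
    nn.MaxPool2d(kernel_size=2),                  # (batch, 16, 13, 13)
    nn.Conv2d(16, 32, kernel_size=3), nn.ReLU(),  # (batch, 32, 11, 11)
)

n = flatten_size(exp4_features)
print(n, n == 32 * 11 * 11)  # 3872 True

# The measured size plugs straight into the classifier head
classifier = nn.Sequential(exp4_features, nn.Flatten(), nn.Linear(n, 10), nn.LogSoftmax(dim=1))
```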

exp5

```python
nn.Conv2d(in_channels=1, out_channels=16, kernel_size=5),  # output shape (batch, 16, 24, 24)
nn.ReLU(),
nn.AvgPool2d(kernel_size=2),  # output shape (batch, 16, 12, 12)
nn.Conv2d(in_channels=16, out_channels=32, kernel_size=5),  # output shape (batch, 32, 8, 8)
nn.ReLU(),
nn.Flatten(),
nn.Linear(32*8*8, self.num_classes),
nn.LogSoftmax(dim=1),
```

Valid max accuracy : 0.07
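Task (3) in the list at the top, replacing the fixed pooling with Adaptive Pooling, is not covered by the experiments above. A minimal sketch of one way to approach it, assuming `nn.AdaptiveAvgPool2d` with a 4×4 output (both the average variant and the 4×4 size are arbitrary illustrative choices, and the class name `AdaptiveCNN` is hypothetical). The adaptive layer fixes the spatial size handed to `nn.Flatten`, so the `nn.Linear` size no longer depends on the kernel sizes or the input resolution:

```python
import torch
import torch.nn as nn


class AdaptiveCNN(nn.Module):
    """Hypothetical sketch for task (3): fixed pooling replaced by adaptive pooling."""

    def __init__(self, num_classes: int = 10):
        super().__init__()
        self.layer = nn.Sequential(
            nn.Conv2d(in_channels=1, out_channels=16, kernel_size=5),   # (batch, 16, 24, 24)
            nn.ReLU(),
            nn.Conv2d(in_channels=16, out_channels=32, kernel_size=5),  # (batch, 32, 20, 20)
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(output_size=(4, 4)),  # always (batch, 32, 4, 4)
            nn.Flatten(),
            nn.Linear(32 * 4 * 4, num_classes),
            nn.LogSoftmax(dim=1),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.layer(x)


model = AdaptiveCNN()
print(model(torch.zeros(8, 1, 28, 28)).shape)  # torch.Size([8, 10])
print(model(torch.zeros(8, 1, 32, 32)).shape)  # torch.Size([8, 10]), same Linear size
```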
