Resources : https://www.youtube.com/playlist?list=PLfaIDFEXuae2LXbO1_PKyVJiQ23ZztA0x

Query Translation

translate the user's query to improve retrieval

why? user queries are often ambiguous → if the query is poorly written, the relevant documents may not be retrieved

(higher level) step-back question

↑

Question → re-writing (Multi-query, RAG-fusion)

↓

(lower level) sub-question

Source : https://github.com/langchain-ai/rag-from-scratch/blob/main/rag_from_scratch_5_to_9.ipynb

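1. Multi-Query : rewrite the question into several differently worded queries, retrieve for each, and take the unique union of all retrieved documents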
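A minimal sketch of the merge step, assuming the rewritten queries already exist (in the notebook an LLM generates them) and that documents can be compared as plain strings. `unique_union`, `multi_query_retrieve` and `toy_index` are hypothetical names for illustration.

```python
from typing import Callable

def unique_union(doc_lists: list[list[str]]) -> list[str]:
    """Flatten the per-query results and drop duplicates, keeping first-seen order."""
    seen: set[str] = set()
    merged: list[str] = []
    for docs in doc_lists:
        for doc in docs:
            if doc not in seen:
                seen.add(doc)
                merged.append(doc)
    return merged

def multi_query_retrieve(queries: list[str], retrieve: Callable[[str], list[str]]) -> list[str]:
    # One retrieval per rewritten query, then merge everything into one list
    return unique_union([retrieve(q) for q in queries])

# Hypothetical rewrites (normally produced by an LLM) mapped to toy retrieval results
toy_index = {
    "What is task decomposition?": ["doc_a", "doc_b"],
    "How do agents break a task into steps?": ["doc_b", "doc_c"],
    "What are subgoals in LLM agents?": ["doc_a", "doc_d"],
}
docs = multi_query_retrieve(list(toy_index), lambda q: toy_index[q])
print(docs)  # ['doc_a', 'doc_b', 'doc_c', 'doc_d']
```

A plain `list(set(...))` would also deduplicate, but it loses the order in which documents were first retrieved; the explicit loop keeps it.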

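2. RAG-fusion : like Multi-Query, but re-rank the retrieved documents instead of only merging them

reciprocal_rank_fusion

list of queries → retrieval for each query → one ranked list of documents per query → fused into a single ranking by reciprocal_rank_fusion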
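A minimal sketch of reciprocal rank fusion over toy ranked lists, assuming documents are plain strings. Each document's fused score is the sum of 1 / (rank + k) over every list it appears in, with k = 60. Here rank counts from 0; the original RRF formulation counts from 1, which only shifts scores slightly.

```python
def reciprocal_rank_fusion(results: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Fuse several ranked lists into one ranking.

    A document's score is the sum of 1 / (rank + k) over every list it appears in.
    rank counts from 0 here; a larger k flattens the gap between top and lower ranks.
    """
    fused: dict[str, float] = {}
    for docs in results:
        for rank, doc in enumerate(docs):
            fused[doc] = fused.get(doc, 0.0) + 1.0 / (rank + k)
    # Highest fused score first
    return sorted(fused.items(), key=lambda item: item[1], reverse=True)

# Toy ranked results for three rewritten queries
results = [
    ["doc_a", "doc_b", "doc_c"],
    ["doc_b", "doc_a"],
    ["doc_b", "doc_d"],
]
ranking = reciprocal_rank_fusion(results)
print([doc for doc, _ in ranking])  # ['doc_b', 'doc_a', 'doc_d', 'doc_c']
```

`doc_b` wins because it appears near the top of all three lists, even though `doc_a` is ranked first in one of them.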

3. Decomposition : decompose the question into sub-problems

a. answer recursively

IRCoT (Interleaving Retrieval with Chain-of-Thought) : combines CoT reasoning with retrieval

use the previous question-answer pairs to solve the next sub-question, building up the solution step by step

{question} - the sub-question to solve in this step

{q_a_pairs} - Q&A pairs from the previous steps

{context} - documents retrieved for the current sub-question
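A minimal sketch of the recursive loop, assuming the sub-questions are already generated and using stand-ins for the retriever and the LLM. `answer_recursively`, `format_qa_pair` and `fake_llm` are hypothetical names, and the exact `q_a_pairs` text format is an assumption.

```python
from typing import Callable

def format_qa_pair(question: str, answer: str) -> str:
    # Text appended to {q_a_pairs} after each step (this exact format is an assumption)
    return f"Question: {question}\nAnswer: {answer}\n\n"

def answer_recursively(
    sub_questions: list[str],
    retrieve: Callable[[str], list[str]],
    answer: Callable[[str, str, str], str],
) -> tuple[str, str]:
    """Answer sub-questions in order, feeding earlier Q&A pairs into each later step.

    Returns the final answer and the accumulated q_a_pairs text.
    """
    q_a_pairs = ""
    last_answer = ""
    for question in sub_questions:
        context = "\n".join(retrieve(question))  # {context}: docs for this step's question
        last_answer = answer(question, q_a_pairs, context)  # LLM call in a real pipeline
        q_a_pairs += format_qa_pair(question, last_answer)
    return last_answer, q_a_pairs

# Toy stand-ins: sub-questions written by hand, a dict as the retriever,
# and a fake "LLM" that reports how many earlier Q&A pairs it was given
toy_docs = {"What is X?": ["X is a tool."], "How is X used?": ["X is used for Y."]}

def fake_llm(question: str, q_a_pairs: str, context: str) -> str:
    return f"{context} (prior pairs: {q_a_pairs.count('Question:')})"

final, history = answer_recursively(list(toy_docs), lambda q: toy_docs[q], fake_llm)
print(final)  # X is used for Y. (prior pairs: 1)
```

The answer to the last sub-question serves as the answer to the original question, since every step has seen all earlier Q&A pairs.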

b. answer individually and concatenate

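suited to a set of independent (parallel) sub-questions: answer each one with its own retrieval, then concatenate the Q&A pairs as context for a final answer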
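A minimal sketch of the "answer individually" variant, again with stand-ins for the retriever and the LLM. `answer_individually` is a hypothetical name; running the sub-questions in a thread pool is my own choice to reflect that they are independent, not something taken from the source.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

def answer_individually(
    sub_questions: list[str],
    retrieve: Callable[[str], list[str]],
    answer: Callable[[str, str], str],
) -> str:
    """Answer each sub-question independently, then concatenate the Q&A pairs."""
    def solve(question: str) -> str:
        context = "\n".join(retrieve(question))
        return answer(question, context)  # LLM call in a real pipeline

    # No sub-question depends on another, so they can run concurrently;
    # pool.map yields results in the same order as the inputs
    with ThreadPoolExecutor() as pool:
        answers = list(pool.map(solve, sub_questions))

    return "".join(
        f"Question {i}: {q}\nAnswer {i}: {a}\n\n"
        for i, (q, a) in enumerate(zip(sub_questions, answers), start=1)
    )

# Toy stand-ins: a dict as the retriever and an "LLM" that echoes the context
toy_docs = {"What is A?": ["A is alpha."], "What is B?": ["B is beta."]}
qa_context = answer_individually(list(toy_docs), lambda q: toy_docs[q], lambda q, ctx: ctx)
print(qa_context)
```

The returned text would then be passed as context to one final prompt that synthesizes an answer to the original question.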

ref :

1. Least-To-Most Prompting Enables Complex Reasoning In Large Language Models

https://arxiv.org/pdf/2205.10625

2. Interleaving Retrieval with Chain-of-Thought Reasoning for Knowledge-Intensive Multi-Step Questions

https://arxiv.org/pdf/2212.10509

4. Step-back prompting

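use few-shot examples of (original question, step-back question) pairs, where the step-back question is a more abstract (≒ higher-level) version of the original, to have the LLM produce a step-back question for the given question; then retrieve for both questions and answer using both contexts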

{normal_context} : context retrieved for the original question

{step_back_context} : context retrieved for the step-back question
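A minimal sketch of the step-back flow with a fake LLM and a dict-based retriever. The few-shot pairs and prompt wording are my own illustrative paraphrases, not the notebook's text; `step_back_prompt`, `step_back_answer` and `fake_llm` are hypothetical names.

```python
from typing import Callable

# Illustrative few-shot pairs: (original question, more abstract step-back question)
EXAMPLES = [
    ("Which year did the Eiffel Tower open?", "What is the history of the Eiffel Tower?"),
    ("Can penguins fly?", "What are the physical characteristics of penguins?"),
]

def step_back_prompt(question: str) -> str:
    """Few-shot prompt asking the LLM for a more generic version of `question`."""
    shots = "\n\n".join(f"Input: {q}\nOutput: {sb}" for q, sb in EXAMPLES)
    return (
        "Rewrite the question as a more generic, higher-level step-back question.\n\n"
        f"{shots}\n\nInput: {question}\nOutput:"
    )

def step_back_answer(
    question: str,
    llm: Callable[[str], str],
    retrieve: Callable[[str], list[str]],
) -> str:
    step_back_question = llm(step_back_prompt(question)).strip()
    normal_context = "\n".join(retrieve(question))  # {normal_context}
    step_back_context = "\n".join(retrieve(step_back_question))  # {step_back_context}
    final_prompt = (
        "Answer the question, using the following context where relevant.\n\n"
        f"{normal_context}\n{step_back_context}\n\n"
        f"Original question: {question}\nAnswer:"
    )
    return llm(final_prompt)

# Toy stand-ins: a fake LLM that records its prompts, and a dict-based retriever
prompts: list[str] = []

def fake_llm(prompt: str) -> str:
    prompts.append(prompt)
    # First call plays the step-back rewriter, second call the answerer
    return "What is the history of X?" if len(prompts) == 1 else "(answer)"

toy_docs = {
    "When was X founded?": ["X was founded in year N."],
    "What is the history of X?": ["X grew from a small lab into a company."],
}
result = step_back_answer("When was X founded?", fake_llm, lambda q: toy_docs.get(q, []))
```

The final prompt sees both the specific context and the broader background, which is the point of asking the more abstract question.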

ref :

1. Take A Step Back: Evoking Reasoning Via Abstraction In Large Language Models

https://arxiv.org/pdf/2310.06117

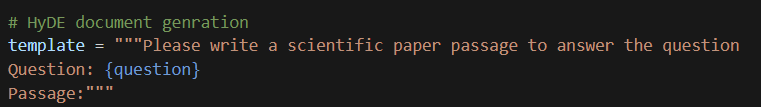

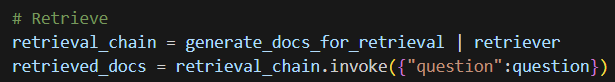

5. HyDE (Hypothetical Document Embeddings)

Documents : dense and large chunks

Questions : sparse and low in quality ^^;

map questions into the document space by generating hypothetical documents for them

→ intuition : a hypothetical document is more likely to lie close (in the document embedding space) to the document to be retrieved than the short, raw question is

pipe the hypothetical document into the retriever and fetch related docs in the index

the prompt that generates the hypothetical document can be tuned to suit the domain in question (e.g., asking for a scientific paper passage)
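A minimal sketch of the HyDE idea. As an assumption for illustration, a toy bag-of-words vector stands in for a dense embedding model and a fixed string stands in for the LLM-generated passage; `embed`, `cosine` and `hyde_retrieve` are hypothetical names.

```python
import math
import re
from collections import Counter
from typing import Callable

def embed(text: str) -> Counter:
    # Toy bag-of-words vector; a real HyDE setup uses a dense embedding model
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def hyde_retrieve(
    question: str,
    generate_passage: Callable[[str], str],
    corpus: list[str],
    top_k: int = 1,
) -> list[str]:
    hypothetical = generate_passage(question)  # LLM writes a passage that might answer it
    query_vec = embed(hypothetical)  # search with the passage, not the raw question
    return sorted(corpus, key=lambda doc: cosine(query_vec, embed(doc)), reverse=True)[:top_k]

corpus = [
    "Task decomposition splits a complex task into smaller subgoals "
    "that an agent plans and solves step by step.",
    "The weather today is sunny with light wind.",
]
question = "how do agents handle big tasks?"
fake_passage = (  # stand-in for the LLM-generated hypothetical document
    "Agents handle complex tasks through task decomposition: the task is split "
    "into smaller subgoals, and each subgoal is solved step by step."
)
print(cosine(embed(question), embed(corpus[0])))  # 0.0 (no shared words)
print(hyde_retrieve(question, lambda q: fake_passage, corpus))  # the task-decomposition doc
```

The raw question shares no words with the relevant document, while the hypothetical passage, written in "document style", overlaps with it heavily. A dense embedding model is less brittle than word overlap, but the same gap between short questions and long documents is what HyDE targets.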

ref:

1. Precise Zero-Shot Dense Retrieval without Relevance Labels

https://arxiv.org/pdf/2212.10496
